Apologies for the double-mailing of yesterday’s post. I screwed up the schedule.

Some rulers say there is a nitrogen "crisis", and others say there is a climate "crisis". There is no nitrogen "crisis", and there is no climate "crisis", either. But since those who rule over us think these crises exist, it is worth investigating why they think so.

One reason (well known to regular readers) is models: too much unjustified trust in Expert models.

The AERIUS/OPS model (OPS for short) is a model which, among other things, estimates the deposition of certain forms of nitrogen (and other chemicals). It's used extensively in the Netherlands by rulers to make consequential decisions, such as ordering the closing of farms.

An open question, for which I have no answer, is where these rulers think their food will come from after they save us all from cow farts? China? Albert Heijn? You tell me.

Anyway, my friends Geesje Rotgers and Jaap Hanekamp and I have a new paper: Criticizing AERIUS/OPS Model Performance. Download and read at your leisure. If you're in a hurry and don't have spare toeslaag in your ogenblikkelijk, go to Hanekamp's place to read a summary in Dutch. Or read this post.

The paper is long and detailed, but not especially complicated. I'll focus here on one or two aspects of model validation---also called forecast verification---which are more unfamiliar, but which are crucial to grasp.

Now model validation isn't easy. It requires time and money: at least as much time as it took to create the model, and usually more, which makes it expensive. These facts tend to discourage thorough vetting.

One way, and the worst way, is to test the model on already known data (a.k.a. observations). This is because (a) it's always possible to build a model which predicts perfectly old observations, and (b) the easiest person to fool is yourself, and (c) the second easiest person to fool is the model user.

This means the only true way to test a model is on observations never before seen or used in any way in building that model.
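To see point (a) in miniature, here is a rough sketch with made-up numbers, nothing to do with OPS itself. The "model" is a hypothetical lookup table that memorizes old observations, so it is perfect on them by construction and falls back to the old mean for anything new.

```python
import random

def fit_lookup_model(xs, ys):
    """'Train' by memorizing every (input, observation) pair already seen."""
    table = dict(zip(xs, ys))
    fallback = sum(ys) / len(ys)  # prediction for inputs never seen before
    return lambda x: table.get(x, fallback)

def mean_abs_error(model, xs, ys):
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(1)

# Made-up data: the observations are pure noise, unrelated to the inputs.
old_x = list(range(50))
old_y = [rng.gauss(10, 3) for _ in old_x]
new_x = list(range(50, 100))
new_y = [rng.gauss(10, 3) for _ in new_x]

model = fit_lookup_model(old_x, old_y)

in_sample_error = mean_abs_error(model, old_x, old_y)      # old observations
out_of_sample_error = mean_abs_error(model, new_x, new_y)  # never-seen observations

print("error on old observations:", in_sample_error)
print("error on new observations:", out_of_sample_error)
```

The error on old observations is exactly zero, which says nothing about how the model does on data it never saw.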

Those who built OPS, or some of them, knew this. Some true validations were attempted. But not many, and certainly not thoroughly. The verification measures used were inferior, and there was no accounting for skill. Skill as it is defined formally.

SKILL

I have attempted to explain skill many times, on the blog, in papers, and in a book. I can't make it stick. Maybe you have better ideas how to describe it. Skill is a commonplace in meteorological modeling, far less so (for obvious reasons) in climatological, and almost unknown elsewhere. But it should be everywhere.

This is skill: if you have two models, A and B, and B is better than A, then you should not use A, but should use B. B has skill over A.

Now I ask you: is that complicated? True, we have to define "better". But that's easy. It means what it says: better as defined by whatever performance measure you picked. B having skill over A doesn't mean, or imply, B is any good or should be used, of course. Just that if you were forced to pick, and such things as cost or availability weren't important, you'd pick B.

Yes?

OPS does not have skill with respect to a simple "mean" model.

That is, if we're predicting NH3 (or SO2 or whatever) deposition at some location, and we knew the mean over the time of prediction and used that mean as a prediction, that model (for it is indeed a model) would often beat the vastly more sophisticated OPS---using the performance measures selected by those who rely on the model.
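Here is a minimal sketch of a skill calculation, assuming the common form "one minus the ratio of scores" and assuming mean squared error as the performance measure. Both choices are mine for illustration, and the numbers are invented. They are not OPS output.

```python
def mean_squared_error(predictions, observations):
    return sum((p - o) ** 2 for p, o in zip(predictions, observations)) / len(observations)

def skill_score(model_pred, reference_pred, observations, score=mean_squared_error):
    """1 - score(model) / score(reference).

    Assumes lower scores are better and the reference score is not zero.
    Positive: the model beats the reference. Negative: the reference wins.
    """
    return 1.0 - score(model_pred, observations) / score(reference_pred, observations)

# Invented numbers for illustration only.
observations = [4.0, 6.0, 5.0, 7.0, 3.0]
sophisticated = [7.0, 3.0, 8.0, 4.0, 6.0]  # a hypothetical fancy model's predictions

# The "mean" model: predict the observed mean every time.
obs_mean = sum(observations) / len(observations)
mean_model = [obs_mean] * len(observations)

skill = skill_score(sophisticated, mean_model, observations)
print("skill of the fancy model over the mean model:", skill)
```

With these invented numbers the skill is negative, so if you were forced to pick, you'd pick the mean model. Swap in any other performance measure through the `score` argument.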

Our paper describes these measures. One is "fractional bias."

FB = 2 x [ mean(Y) - mean(X) ] / [ mean(Y) + mean(X) ],

where Y is the observations and X the model prediction.

This is best when FB=0, which happens when the mean of the predictions equals the mean of the observations. Matching means is, of course, a desirable trait, but it is the weakest of verification measures, because, as experience shows and as we show in the paper, even poor models may have equal means with observations.

Such as the mean model. Which will always nail, absolutely kill, the FB. How can it not?
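A quick sketch of why, using the FB formula above with Y the observations and X the predictions. The numbers and the three toy "models" are made up for illustration.

```python
def mean(values):
    return sum(values) / len(values)

def fractional_bias(observations, predictions):
    """FB = 2 * (mean(Y) - mean(X)) / (mean(Y) + mean(X))."""
    y_bar, x_bar = mean(observations), mean(predictions)
    return 2.0 * (y_bar - x_bar) / (y_bar + x_bar)

# Invented observations.
observations = [2.0, 9.0, 4.0, 5.0]

# Mean model: predict the observed mean every time.
mean_model = [mean(observations)] * len(observations)

# A poor model: the right values in the wrong order, so the mean still matches.
scrambled = list(reversed(observations))

# A model that predicts double what was observed.
doubled = [2.0 * v for v in observations]

fb_mean_model = fractional_bias(observations, mean_model)
fb_scrambled = fractional_bias(observations, scrambled)
fb_doubled = fractional_bias(observations, doubled)

print(fb_mean_model, fb_scrambled, fb_doubled)
```

The mean model and the scrambled model both score a perfect FB of zero, though neither tells you anything about any particular observation. Only the doubled model is penalized.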

That doesn't mean you should use the mean model. That is the Do Something Fallacy, so beloved by many. It means you should fix OPS. Read the paper for details, and for times where OPS might sometimes (not often) have skill over the mean, using FB and other measures.

FOOLING YOURSELF

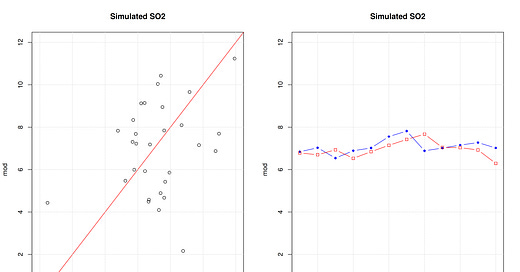

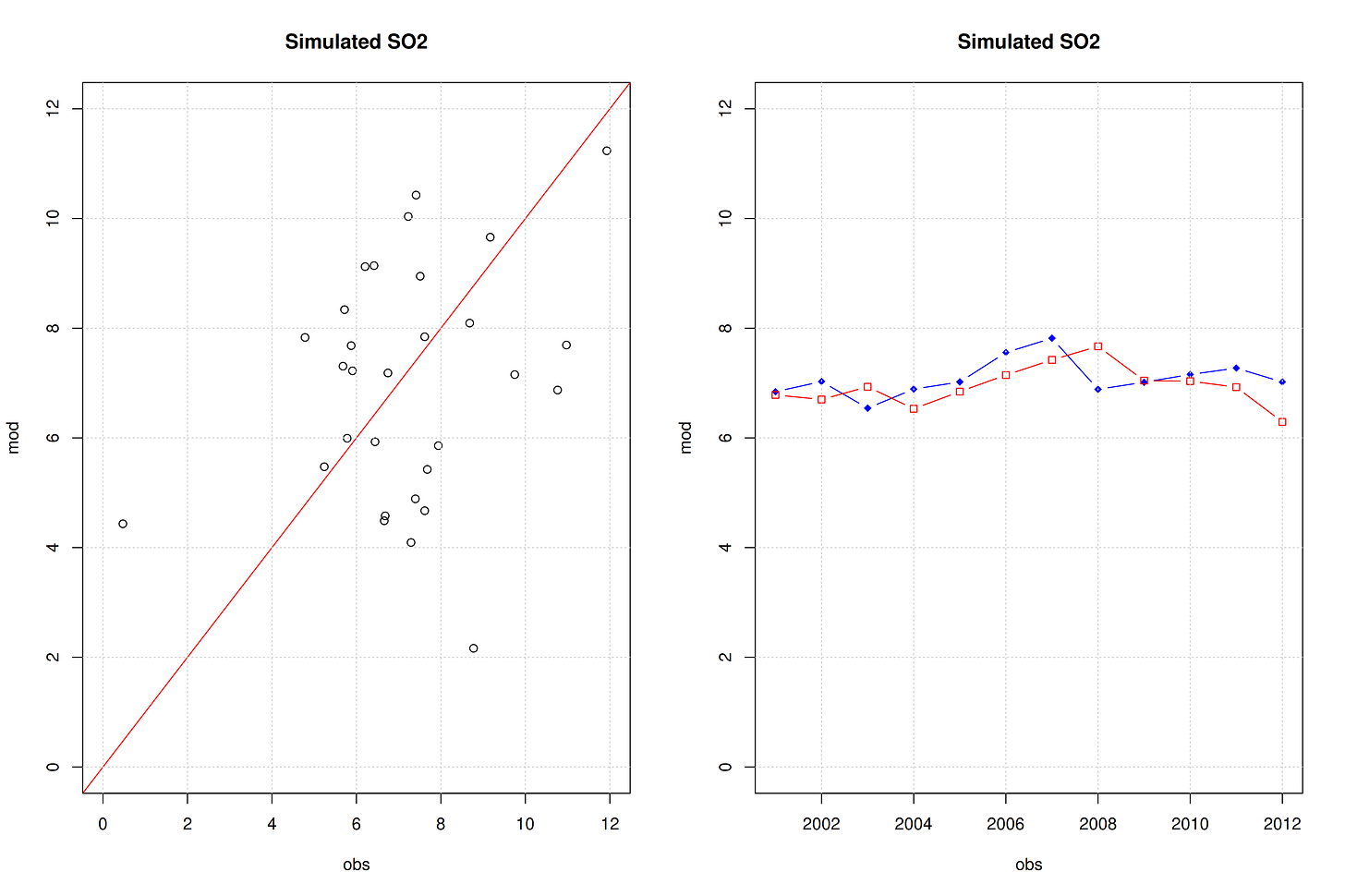

A prime way to fool yourself is aggregations, or averaging. Formal OPS validations played this trick on themselves. Here's a picture to show how easy this is

For whatever reason, OPS validation papers always invert the axes, putting the observations on the x-axis and predictions on the y-axis. We followed suit. The left panel is a bunch of made up numbers, no correlation between observation and prediction, except that both sets have the same mean. The red is a one-to-one line on which all dots would lie if the model here were perfect (notice, though, how that red line can fool the eye: it seems to draw the points closer to it).

The model isn't perfect. It can't be. It's made up. It stinks. On purpose. But suppose the left picture was days in a month. What if we took the average of those days, then the average of each month over a year, and then plotted those averages over the course of several years?

They'd look like the right panel---which is what we did with more made-up numbers. All of a sudden, the model looks fantastic! Wow! Look at how close those lines are to one another!

These are still all numbers completely made up, with zero correlation between them, except they agree in the average. The correlation after averaging suddenly appears.
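You can try the trick yourself. Below is a rough sketch, not the code behind the figures: the shared mean of 10, the spread of 3, and the 10 years of 12 thirty-day months are all assumptions picked for illustration. Observations and predictions are generated independently and agree only in their mean.

```python
import random
from statistics import mean

rng = random.Random(42)
YEARS, MONTHS, DAYS = 10, 12, 30  # assumed layout, for illustration

def daily_series():
    """Independent noise around a shared mean of 10; every value is made up."""
    return [[[rng.gauss(10, 3) for _ in range(DAYS)]
             for _ in range(MONTHS)]
            for _ in range(YEARS)]

obs = daily_series()
pred = daily_series()  # generated independently of obs

def flatten(series):
    return [day for year in series for month in year for day in month]

def yearly_means(series):
    # Average days into months, then months into years.
    return [mean(mean(month) for month in year) for year in series]

def mean_abs_gap(a, b):
    return mean(abs(x - y) for x, y in zip(a, b))

daily_gap = mean_abs_gap(flatten(obs), flatten(pred))
yearly_gap = mean_abs_gap(yearly_means(obs), yearly_means(pred))

print("typical gap, day by day:  ", round(daily_gap, 2))
print("typical gap, year by year:", round(yearly_gap, 2))
```

The year-by-year gap comes out far smaller than the day-by-day gap, even though the "model" knows nothing about the observations. Averaging squeezes both series toward the mean they share, and that alone makes the lines hug each other.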

This proves that you can easily fool yourself in validations using averages. (Somewhat hilariously, even this made-up model would not have skill next to the observed mean model, with the FB as a score. Old time readers will recall we have seen this trick used many, many times in climate model validations.)

TRIVIALITY

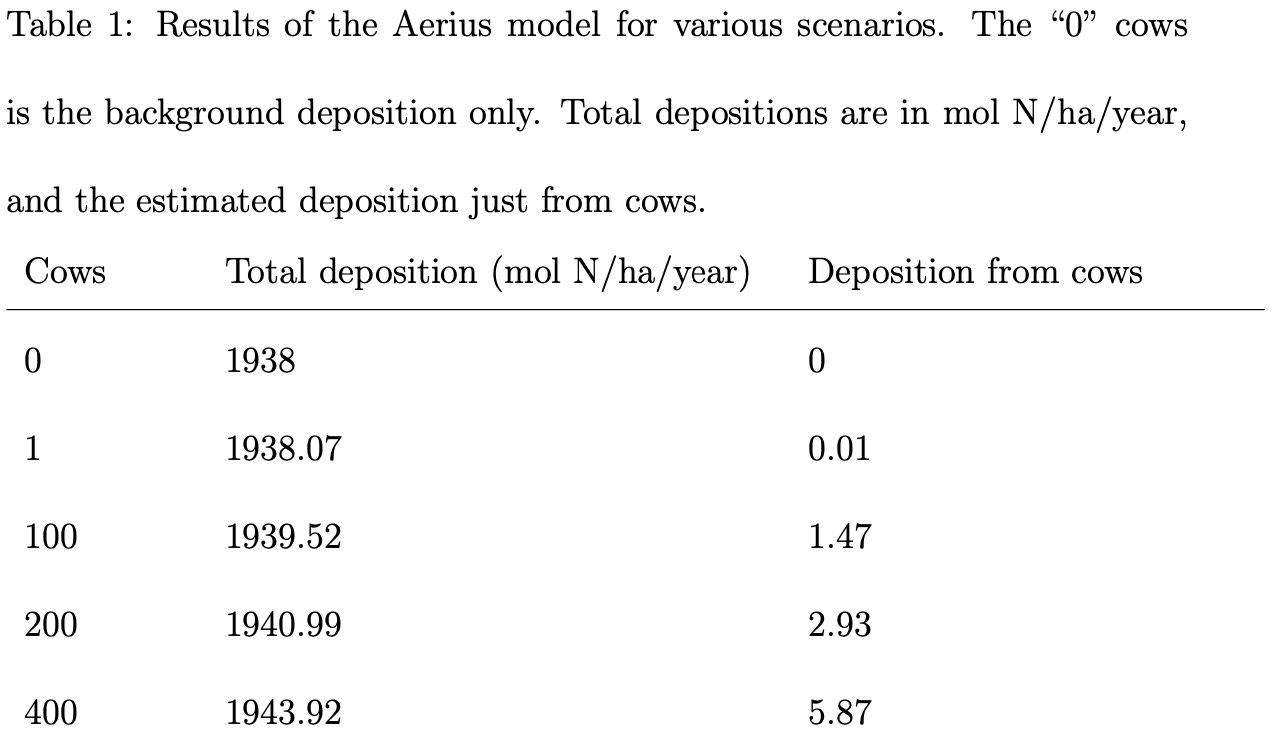

We also asked: even if the OPS is perfect, is it important? One of the concerns rulers have is how nitrogen from dairy farms affects so-called natural areas. So we ran the model using typical conditions, and looked at deposition at a nature area from a simulated farm. Here's the results:

One of the schemes rulers have is forcing farmers to give up half their cattle. To "solve" the nitrogen "crisis." At least for this run, halving from 400 to 200 cows leads to a reduction of about 3 moles of nitrogen per hectare per year at the nature area (the hexagon with the largest deposition).

Eliminating all cows leads to a reduction of a non-whopping 5 moles of nitrogen per hectare per year.

We go into details in the paper, but it must be obvious that even measuring that trivial difference is difficult, or impossible. Plus the atmospheric measures the Netherlands now uses (the MAN data) are estimates themselves. Meaning they have uncertainty in them.

Of course, we do not pretend these runs are a complete review of OPS. But they are not unusual. Add to that the weak and unskillful performance of OPS, and we can't be certain what is going on.

DECISIONS

"Okay, Briggs. You convinced me. OPS needs fixing. But we have to do something, so we have no choice but to use it until something better comes along. We need to alleviate the nitrogen crisis."

No you don't. You don't have to do anything. That's the Do Something Fallacy again.

Even worse, the reason you believe there is a crisis is because of the model. You're arguing in a circle. You can't say with any certainty there is a crisis. Even if you believe there is, and you believe OPS, you won't be able to measure with any reasonable degree of certainty whether your "solutions" had any effect.

Besides all that, if you do implement your "solutions" and close down the farms, where are you going to get your food?

Buy my new book and learn to argue against the regime: Everything You Believe Is Wrong.

Visit wmbriggs.com.

This whole model thing is completely bizarre. Humanity has wound itself up into one big circle jerk.

Look at all the covid models, all completely wrong. They didn't translate to the real world at all. The same thing is going on here again.

I think there is an aspect that people don't want to consider: "STEM", or perhaps "Science", "Statistics", etc., is not the be-all and end-all of helping humanity, and I would state that these fields are actually harming humanity at this point.

Then again, I am biased as I believe "progress" to be a hoax in many regards.

Our benevolent rulers are trying to save us from nitrogen pox.