Statistical Challenge: Can You Rate How Good This Study Was?

I will lose many of you today. But for those up for an intellectual challenge, this post is for you. This will not be easy.

Let’s play a game. I’m going to give you the outline of a study, a real one. I want you to say whether it’s a good, bad, or in-between study. I want you to judge if the conclusion the researchers made can be justified, or if you see flaws they might have overlooked.

I’m not going to tell you what the study is about. The reason is that if I did give the frame, some of you would be prejudiced one way or the other. From my experience with you, my friends, I’d say you will be split about fifty-fifty on this topic. I don’t want pre-formed judgments playing a role here.

We have to do this on the honor system. It’s too easy to search and discover what this study really was. Don’t do that. Or, if you do, keep silent in the comments. I want you to have an honest go at this, and see if you have learned the lessons I have been trying to teach these many years.

To make it as fair as possible, and to cut down cheating, I’m going to paraphrase everything, and I’ll describe, but not show, the relevant graphs. Reverse image searches are too good and you’d find it in a hurry. If I change any numbers, all these changes will be scrupulous and won’t change our conclusions, as you’ll discover next week when I reveal the answer.

You have one week.

The study is medical and looked at a certain kind of measured harm between two groups of kids, labeled U and N, and some alleged bad things in the blood. They also tracked the number of times the kids had a certain procedure.

The main question was this: is there less B in kids in U or N, and could smaller amounts of B be caused by bad things in the blood, moderated by the procedure?

The two groups were pretty well matched. The ages, weights, sizes, parents’ ages and all that kind of thing were as close as you’re going to get between two groups. Again, the only named ostensible difference between the groups was the kids getting U or N.

U is unnatural, and N is natural. N is something that happens naturally, whereas the kids (and their parents) had to go out of their way to avoid N and get U, which was artificial and manufactured. The strange thing is the conclusion: the natural kids (N) had purportedly worse outcomes!

They also say that there were more bad things in the blood leading to lower levels of B in the natural group (N).

Here are the steps the researchers followed.

1. They looked only at healthy kids from both U and N. Any kid that had any kind of malady was excluded. The kids were reportedly raised normally in an advanced Western country.

2. The outcome was a certain measure of blood; call it B. B was a change from one time point to another. B itself is seen as a good thing, with high values better than low. But again, whatever values the kids had, no kid had any malady. This was a study only to measure the potential of a malady, which no kid reportedly had (but see below).

3. They recruited 19 Us and 79 Ns. Then they measured the blood for B and other things on all of these kids. And they also measured blood on their mothers.

4. The researchers noticed something odd in 35 of the kids. We don’t know the mix, U and N, just the total number. They re-measured the blood on these 35 kids, and not the other 63, because something appeared odd in their blood, but they don’t say much about what was odd. Only that it wasn’t B. Another 14 kids had an increased value in another blood measure that was not B. Again, we don’t know how many were U and how many N, or if the 14 were part of the 35. So they did fresh blood draws on these kids.

5. All of the blood measurements used standard protocols and procedures.

6. After a government report was issued on U and N and the alleged bad things in the blood, the researchers went in and gathered more blood.

7. Next was the stats. They did a regression model on the changes of B and the timing of a certain procedure. All kids had the procedure at least once; some had it more than once. The procedure, call it P, was not related to U or N. With one exception, all the analysis the researchers presented, including everything downstream, was based on the regressed values and not the raw data.

8. Because there were other measures in the blood besides B, that might influence or be influenced by B, i.e. those alleged bad things, they included these measures in the model with the procedure timing, using what is known as a stepwise regression to find the “best” model (with respect to a statistical criterion we can ignore). The group U and N were, of course, also in the model.

9. To check this model, they used a smoother regression, cut the data into differently sized chunks, and the original model may or may not have been adjusted because of how B looked in the chunks. They are light on details here.

10. Now in 41 of the kids, there was observed some sneezing or coughing. But we don’t know how many were U or N. They then looked at a common marker for inflammation and discovered 22 kids had high levels. Again, we don’t know how many were U or N. Nor do we know the levels of alleged bad things in any of these kids.

11. Data comparing measurements of the blood between U and N were then presented. Not of B, but of other things in the blood. Things that are considered bad. The U (artificial) group had lower mean values of bad things for almost all bad things, and the N (natural) had higher. The ranges of the N group were wider, but this can be accounted for by the much larger sample size.

12. One school of thought said that the bad things should have been higher in the artificial group, but another said it should have been higher in the natural. This is the fifty-fifty split we discussed.

13. A curious plot was offered based on the model. Since the U group could not do what the N group did (since U is artificial), the researchers showed counterfactual predictions, plotted against bad things in the blood, as if the U group had been natural. Again, there were more bad things in the blood in the N kids. We cannot logically see the raw data here, since the U group did not do N.

14. We next see tables and plots of B and the bad things in the blood, the main point of the research. We no longer see the groups U and N, just the modeled levels of B or changes in B plotted or averaged against the bad things.

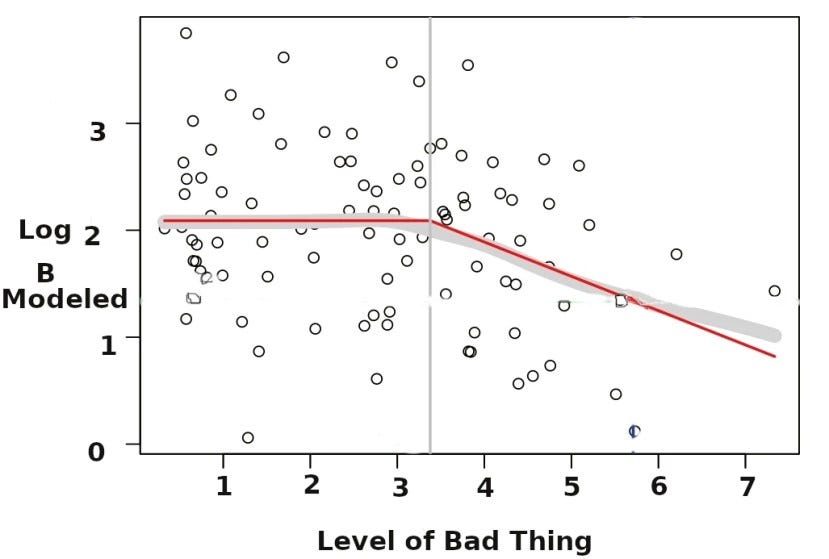

15. The models now have a step in them. That is, they are linear in the bad things up to a point, at which point the slope is allowed to change. The change point was chosen by another model. We are shown scatterplots of logged modeled B against the bad things. The scatter is very wide, and the model fits are never that good. But there is a hint that higher values of the bad thing are associated, weakly, with lower levels of modeled B. And again we do not see the raw data and do not know which point belongs to U and which to N, nor do we see the number of procedures.

Here is one of the plots, modified to make cheating less easy. Please do not search for this.

The numbers have all been substituted and I erased identifying information from the plot. The y-axis is modeled logged values of B (the numbers are only labels), and the x-axis is level of bad thing. This is not the raw data. The red line is the second model; the gray bar the confidence interval on the model, and not the predictive interval. The other plots are very similar to this one.
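For those who have forgotten the difference between a confidence interval on the model and a predictive interval, here is a minimal sketch. Everything in it is an assumption made for illustration: the data are simulated (98 points, to match the number of kids, with an invented weak slope and wide scatter), the fit is an ordinary straight line and not the authors' stepped model, and the intervals use the normal approximation of 1.96 standard errors. The function name `interval_half_widths` is my own.

```python
import numpy as np

def interval_half_widths(x, y, x0, z=1.96):
    """Half-widths of the confidence and prediction intervals at x0
    for a simple least-squares line (normal approximation)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    slope, intercept = np.polyfit(x, y, 1)    # highest degree first
    resid = y - (intercept + slope * x)
    s2 = np.sum(resid**2) / (n - 2)           # residual variance
    sxx = np.sum((x - x.mean())**2)
    leverage = 1.0 / n + (x0 - x.mean())**2 / sxx
    ci = z * np.sqrt(s2 * leverage)           # uncertainty in the fitted line
    pi = z * np.sqrt(s2 * (1.0 + leverage))   # uncertainty in a new observation
    return ci, pi

# Simulated data only: these are NOT the study's numbers.
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=98)
y = 1.0 - 0.05 * x + rng.normal(0, 1, size=98)

ci, pi = interval_half_widths(x, y, x0=5.0)
print(f"confidence half-width: {ci:.2f}, prediction half-width: {pi:.2f}")
```

The confidence interval speaks only of where the fitted line is. The predictive interval speaks of where a new kid's value would fall, and it is always the wider of the two because it adds the scatter of the points around the line.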

16. The authors throw in some correlations with this and that which we can ignore. Most correlations are low (below an absolute value of 0.1), with a very few a bit higher (an absolute value of about 0.25).

17. From all this the authors conclude that there is a higher risk of malady from lower levels of B caused by the bad thing, regardless of number of procedures. And that being in group U is better than being in group N.
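Two of the numerical remarks in the steps above can be checked at home. Step 11 says the wider ranges in N can be accounted for by the larger sample size, and step 16 mentions correlations of about 0.1 and 0.25 in absolute value. What follows is a rough sketch, assuming for illustration that both groups are drawn from the very same standard normal distribution (an assumption of mine, not something the study states). The function name `mean_range` is invented.

```python
import numpy as np

def mean_range(n, trials=20_000, seed=1):
    """Average (max - min) of n standard normal draws, over many trials."""
    rng = np.random.default_rng(seed)
    draws = rng.normal(size=(trials, n))
    return float(np.mean(draws.max(axis=1) - draws.min(axis=1)))

range_u = mean_range(19)   # sample size of group U
range_n = mean_range(79)   # sample size of group N
print(f"mean range, n=19: {range_u:.2f}; n=79: {range_n:.2f}")

# For a straight-line relationship, the share of variance
# associated with a correlation r is r squared.
r_squared = {r: r**2 for r in (0.1, 0.25)}
for r, r2 in r_squared.items():
    print(f"|r| = {r}: r^2 = {r2:.4f}")
```

Even with identical distributions, the group of 79 will on average show a wider range than the group of 19, simply because more draws give more chances at extremes. And a correlation of 0.1 corresponds to 1% of the variance, while 0.25 corresponds to about 6%.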

It turns out you might be able to regulate a few of the alleged bad things, though it would be costly. Would you? You could also encourage U over N. Would you?

Do you think the conclusion that U is better than N is justified? Is the bad thing really bad? If you knew what the bad thing was would you avoid it? We’ll ignore the procedure here.

How confident are you the results are accurate, assuming no cheating?

Yes, there’s a lot you don’t know. I’ll answer questions, but I won’t reveal too much until next week. If anybody goes through with this.


Not knowing a damned thing about statistical analysis (despite your best efforts Mr. SGT Briggs), I’m gonna go with a simple “study bad.” Looks like they did lots of data manipulation (not distinguishing U from N on the initial analysis, smoothing, modeling, etc.) so I imagine it would be difficult to conclude anything with certainty. Thus, my “study bad” conclusion.

When I was choosing a career all those years ago I wanted to be a statistician but I had too much personality so I became a tax accountant.

Just kidding.

“Any kid that had any kind of malady was excluded.”

And

“From all this the authors conclude that there is a higher risk of malady…”

Call me stupid, but this seems rather problematic, as they say in corporate-drone speak these days.

I’m going with a choice you did not give: “useless waste of time and (presumably) taxpayer money”.