This week is traditionally a slow one on the blog, so let me have a go at explaining something I've explained a few hundred times before, a thing which has not yet stuck. Maybe the pace of the day will help us.

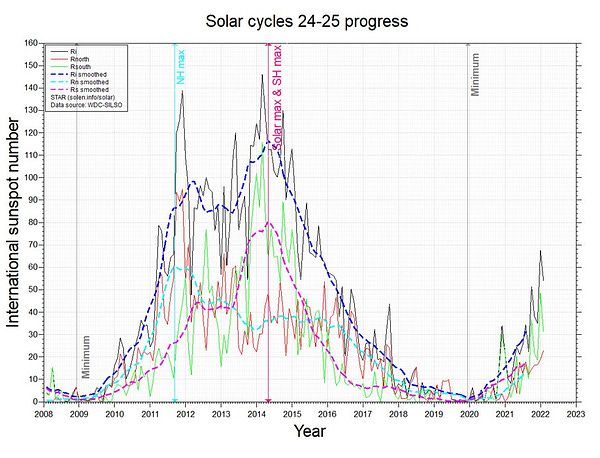

I enjoy collecting statistics (I use that word in its old-fashioned sense) like this:

Well, you tell me: do sunspots cause war? Would a "null hypothesis" significance test produce a wee p, thus "proving" the cause? Or not proving the cause, because we all know correlation isn't causation, so proving the correlation instead. Which didn't need proving, because the correlation is there, and is proof of itself. A large p doesn't make what is there disappear.

So the wee p doesn't prove causation and it doesn't prove correlation. What does it prove?

Nothing.

As I have quoted more times than I can recall, De Finetti in 1974 shouted "PROBABILITY DOES NOT EXIST." Most of his audience didn't understand him, and most still don't.

If probability doesn't exist, any procedure or mechanism or measure that purports to tell you about probability as if it is a thing that exists must therefore be wrong. Right?

P-values are measures, hypothesis tests are procedures, and some obscure machines (used to measure statistics) all purport to tell you about probability as if it is a thing that exists. Since probability does not exist, they are all therefore wrong.

Right?

That's the true, valid, and sound proof against p-values. But it doesn't stick. Many still have the vague belief that, yes, probability does exist, therefore there are "right ways" or "good ways" to use p-values. This is false. So let's use a deeper proof. Here is where you have to pay attention.

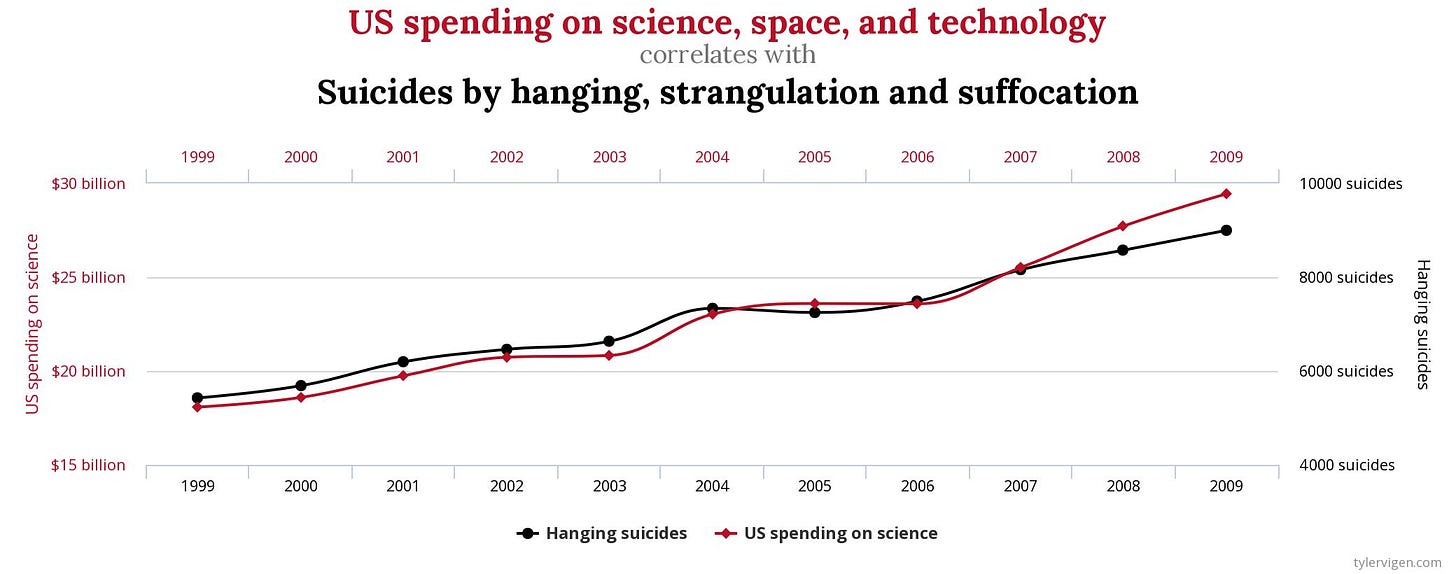

We've seen the site Spurious Correlations many times. Here's one of his plots, "US spending on science, space, and technology" with "Suicides by hanging, strangulation and suffocation."

It's clear that this correlation would give a wee p, or that it's easily extended so that it will. But we all recognize it's a silly correlation.
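To make the claim concrete, here is a minimal Python sketch with invented numbers (not the Spurious Correlations data): two hypothetical series that both drift upward over eleven years, each with its own unrelated year-to-year wiggle. The shared upward drift alone is enough to give a correlation near 1 and a wee p. The p-value uses the Fisher z approximation rather than the exact t-based test, and the names `spending` and `hangings` are illustrative only.

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def approx_p_value(r, n):
    """Two-sided p-value for 'no correlation', via the Fisher z approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

# Invented series: both simply trend upward; the wiggles are unrelated.
years = range(11)
spending = [18 + 0.6 * t + 0.3 * math.sin(t) for t in years]
hangings = [5000 + 250 * t + 120 * math.cos(1.7 * t) for t in years]

r = pearson_r(spending, hangings)
p = approx_p_value(r, len(years))
print(f"r = {r:.3f}, p = {p:.2g}")
```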

Why?

Because we bring in outside information that says there is no way one of these things would cause the other. Simple!

Problem is, there is no way to fit in "outside" information in frequentist theory. In old-fashioned, real old-school, non-frequentist, non-subjective probability, probability as logic, we gather all information we believe is relevant---and even more importantly, we ignore all evidence we believe is irrelevant---and then use it to judge the probability of some proposition. Here the proposition is that spending causes suicides, or suicides cause spending.

In notation: Pr(Y|X), where Y is the proposition and X all information we deem relevant---and the absence of all information we deem irrelevant.
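A toy illustration of the notation, offered as a sketch rather than anything from the post: let Y be "the side showing is a 6" and let X be the premises. The hypothetical function `pr` counts, among the outcomes X allows, the fraction for which Y is true; it assumes X gives no reason to favor one allowed outcome over another. Adding a premise to X changes Pr(Y|X), and nothing outside X enters the calculation.

```python
from fractions import Fraction

def pr(y, x, outcomes):
    """Pr(Y|X) by counting: the fraction of outcomes allowed by premises X
    for which proposition Y is true. Assumes X favors no allowed outcome."""
    allowed = [o for o in outcomes if x(o)]
    if not allowed:
        raise ValueError("premises X rule out every outcome")
    return Fraction(sum(1 for o in allowed if y(o)), len(allowed))

# X: "a six-sided object, sides labeled 1..6, will be tossed and one side will show"
sides = range(1, 7)

def shows_six(o):        # Y
    return o == 6

def x_basic(o):          # nothing beyond the setup above
    return True

def x_even(o):           # the setup plus "the side showing is even"
    return o % 2 == 0

print(pr(shows_six, x_basic, sides))  # 1/6
print(pr(shows_six, x_even, sides))   # 1/3
```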

What should surprise you is that there is no mechanism to do this in frequentism, which is the probability theory that treats probability as if it is a real thing.

In frequentism, the criterion for including or excluding evidence is the "significance" test. Stick with me here, because here's the subtle part. There is always (as in always) an infinite number of premises, or pieces of evidence, that we can add to the "X", in frequentism or in logical probability.

There is no guarantee, of course, that in any logical probability assessment we have chosen the "right" X; indeed, the right X is the one that gives the full cause of Y (so that Pr(Y|right X) = 1). But we are free (and this is the semi-subjective nature of probability and decision) to pick what we like. When we like. Before taking data, after, whenever. And then ask: what is Pr(Y|X)?

But there is no way to pick the "right" X in frequentism without subjecting every potential element of X to a significance test. Since there are an infinite number of candidate X for any Y, and since hypothesis testing acknowledges that spurious correlations can appear legitimate (if the p is wee), either every problem carried out in strict accordance with frequentist theory will turn up an infinite number of spurious correlations, or the testing will "saturate" at some point and the analysis has to end incomplete.
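The spurious-correlation horn of that dilemma is easy to simulate. In this minimal sketch, Y and 1,000 candidate X are all independent random noise, yet roughly 5% of the candidates pass a test at the 0.05 level, which is just what the test's own design promises; every further candidate tested adds more such "discoveries". The seed, sample size, number of candidates, and the Fisher z approximation are arbitrary choices for illustration.

```python
import math
import random

def pearson_r(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def approx_p_value(r, n):
    """Two-sided p-value for 'no correlation', via the Fisher z approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

rng = random.Random(2024)          # fixed seed so the run is repeatable
n_obs, n_candidates, alpha = 50, 1000, 0.05

y = [rng.gauss(0, 1) for _ in range(n_obs)]
hits = 0
for _ in range(n_candidates):
    # Each candidate X is pure noise, generated independently of y.
    x = [rng.gauss(0, 1) for _ in range(n_obs)]
    if approx_p_value(pearson_r(x, y), n_obs) < alpha:
        hits += 1

print(f"{hits} of {n_candidates} irrelevant candidates were 'significant'")
```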

By "saturate" I mean the significance testing math breaks down with finite n: after adding too many premises, the testing can no longer be computed. I won't here explain the math of this, but all statisticians will understand this point.
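The post doesn't spell out the math. One familiar place the breakdown shows up, offered here as an assumed reading rather than the author's own derivation, is ordinary least squares: with an intercept and k predictors fit to n observations, the residual degrees of freedom are n - k - 1. The usual coefficient t-tests divide the residual sum of squares by that number, so once k reaches n - 1 nothing is left over to estimate the error variance, and no p-values can be computed. The helper names below are hypothetical.

```python
def residual_df(n_obs, n_predictors):
    """Residual degrees of freedom for ordinary least squares with an
    intercept plus n_predictors slopes fit to n_obs observations."""
    return n_obs - n_predictors - 1

def can_compute_t_tests(n_obs, n_predictors):
    # Coefficient t-tests estimate the error variance as RSS / residual df;
    # with df <= 0 that estimate, and every p-value built on it, is undefined.
    return residual_df(n_obs, n_predictors) > 0

n = 20
for k in (1, 5, 18, 19, 25):
    print(k, residual_df(n, k), can_compute_t_tests(n, k))
```

With n = 20, the tests survive up to 18 predictors and fail from 19 on; from 20 predictors onward there are more coefficients than observations, so the coefficients themselves are no longer uniquely determined.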

There thus can be no genuine frequentist result, not one entirely consistent with the theory, unless the theory is abandoned at some point. And that point---which always comes---is when the analyst acts like a logical probabilist and excludes certain X.

As it is, the "frequentist" (the scare quotes indicate there is no true or pure such creature, but only approximations) will conduct "tests" on such X he picks, and ignore the tests for X he excludes. All "frequentists" are only partial frequentists. And even more partial than you think.

Here, with spending and suicides, even with a wee p, most "frequentists" would again reject the rejection of the "null" (that is, toss out the wee p), because other X are brought in from which it is deduced that the correlation is silly.

The "frequentist" will counter that they only have to test the X they believe are relevant. But then they have to say how they winnowed down the infinite list to choose the "relevants". How did they do this? It can't be by testing. So there must exist procedures that are not testing that allow picking Xs and excluding Xs.

Read that over and over until it sinks in.

So why not use these other procedures all the time? Or else show us exactly what this strange inherent measure or procedure is that says when to use testing and when not to.

These questions are never answered. At most, we will hear "Well, there are still some good uses for p-values." To which I answer: no there aren't, and we just proved it.

Buy my new book and learn to argue against the regime: Everything You Believe Is Wrong.

Visit wmbriggs.com.