How Do You Know If An Experiment Works? Or, Yet Another Argument Against P-values

Listen to the podcast on YouTube, Bitchute, or Gab.

Anon asks questions about models (I added the bold question brackets and the link). Pay attention most closely to [Q2], which is our main point today.

Thank you for all your material regarding the topic of mathematical modeling.

I have read your paper called “Models Only Say What They’re Told to Say.” But unfortunately, I do not understand the theory of it. Would you like to elaborate a bit on it?

[Q1] If these definitions are correct: “Causal inference is focused on knowing what happens to Y when you change X. Prediction is focused on understanding the next Y given X (and whatever else you’ve got)”*, why do models only say what they are told to say?

[Q2] Let’s say I want to investigate the usefulness of wearing masks. So, I will measure the relevant factors concerning the virus. Then, I will implement the intervention, in this case, the wearing of masks. After that, I will do a measurement again. Would such research not provide the usefulness of the masks (assuming that the research design is correct)?

[Q3] Or, in another setting, let’s say I want to predict the revenue of my bakery. Hence, I will use weather data, sales data, calendar data, and the like. This setting will provide me with valuable predictions that are approximately in concordance with reality. Hence, the bakery can create a better staffing schedule. How does your theory apply to this case?

I would love to hear from you.

God bless.

[Q1] Because they can’t say anything else. Here’s a simple model: “If input is between 0 and 7, print success, else print failure.” That model can only say success or failure, and nothing else. Because that is what we told it to say.
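Here is that model as a minimal Python sketch. The function name `simple_model` is mine, and I am assuming "between 0 and 7" includes both endpoints. Feed it anything you like and it still only says the two things it was told to say.

```python
def simple_model(x: float) -> str:
    """Say 'success' if the input is between 0 and 7, else 'failure'."""
    # Assumption: "between 0 and 7" is read as inclusive at both ends.
    return "success" if 0 <= x <= 7 else "failure"


# Collect everything the model says over a wide spread of inputs.
outputs = {simple_model(x) for x in [-100, -1, 0, 3.5, 7, 8, 1e9]}
print(sorted(outputs))  # ['failure', 'success']
```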

We also accept the premise that “Nothing breaks.” We could entertain a different premise, like, “The model might break, because the input goes haywire, and then anything goes”, or any of a set of similar premises. And even then the model only says what it is told to say conditional on whatever implied premises are deduced from that anything goes.

But there is no warrant to suppose any of this infinite number of additional premises.

The goal we should always have is understanding cause. If we knew the full cause (formal, material, efficient, and final) of some observable Y, even conditional on external circumstance X, then we’d know all there is to know about Y. See also this post on the difference between essential and empirical models.

But the point is simple: models can only say what they’re told to say, because they have no power to do anything else.

[Q2] This is an excellent question, and the heart of all research.

If you run any experiment, or take any observation, of Y (say, coronadoom infection), such that you assume the only difference in conditions is X_1 (masks on) or X_2 (masks off), then given that assumption, the only difference in Y, if there is one, must be caused by X.

Consider this carefully and slowly.

We have only three possibilities. (1) Y is the same regardless of X, (2) Y always changes the same way between X_1 and X_2, or (3) Y changes in different ways, even with the change from X_1 to X_2.

Under (1), you have proven, contingent on believing the only difference is X, that X has no bearing on Y. X is not causative of Y. You may dismiss X as of no interest to Y. This is a very rare, even non-existent, case for contingent Y. Y is constant here!

I cannot stress enough that this is contingent on believing or accepting the only difference is X. We can’t, like in [Q1], assume additional premises that there are other possible causes, because we assumed X is the only possible difference.

Under (2), we have proven that whatever happens to Y under X_1 always happens to Y under X_1, and whatever happens to Y under X_2 always happens to Y under X_2.

If Y = no infection always when X_1, and Y = yes infection always when X_2, then we must conclude masks work. Contingent—and here is that same stress!—on the premise that X is the only possible cause.

These onlys and alwayss are strict as possible. No deviation in the least is allowed!

Under (3), we have proven that the model in which X is the only cause is false. We assumed X is the only cause, but Y varied such that sometimes Y was infection with masks, sometimes no infection with masks, and likewise without masks.

Masks might still be a cause against infection sometimes. But masks cannot be the only cause.
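The three possibilities can be put as a small sketch. The function `classify` and the 0/1 coding of Y (0 = no infection, 1 = infection) are my own illustrative choices, and it assumes each group has at least one observation. Notice how strict the onlys and alwayss are: a single deviation inside either group lands you in (3).

```python
def classify(outcomes_x1, outcomes_x2):
    """Return 1, 2 or 3 for the three possibilities.

    outcomes_x1 / outcomes_x2: non-empty lists of observed Y values
    under X_1 (masks on) and X_2 (masks off).
    """
    constant_under_x1 = len(set(outcomes_x1)) == 1
    constant_under_x2 = len(set(outcomes_x2)) == 1
    if not (constant_under_x1 and constant_under_x2):
        # Y varied while X was held fixed, so X cannot be the only cause.
        return 3
    if outcomes_x1[0] == outcomes_x2[0]:
        # Y is the same regardless of X.
        return 1
    # Y is always one value under X_1 and always another under X_2.
    return 2


print(classify([0, 0, 0], [0, 0, 0]))  # 1
print(classify([0, 0, 0], [1, 1, 1]))  # 2
print(classify([0, 1, 0], [1, 1, 0]))  # 3
```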

Suppose your experiment showed 70% infections in the masks group, and 75% infections in the no-masks group. If masks were the only cause, and prevented infections, then we should have seen 0% infections in the masks group, and 100% infections in the no-masks group.

That is not what we saw, so it cannot be that masks are the only cause. There must be other causes. What are they?

We do not know!

We only made an assumption about masks being the only cause. We proved that that was false.

Again, there must be other causes at work. The results are consistent now with “masks sometimes work” and “masks never work”. The other causes, of which we are ignorant, must be explaining at least some of what we saw, and might explain all of what we saw.
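To see why both stories survive, here is a toy sketch with 100 people per group. Everything in it is invented for illustration: the hidden cause I call `exposed`, the flag `blocked` (whether a mask would stop infection in that person), and the counts chosen to reproduce the 70% and 75% above. It is not a model of any real virus. The point is only that two different causal stories give exactly the same numbers, so the numbers alone cannot pick between them.

```python
def make_group(n, mask, n_exposed, n_blocked=0):
    """Build n hypothetical people; the first n_exposed carry the hidden
    cause, and a mask would block infection in the first n_blocked."""
    return [
        {"mask": mask, "exposed": i < n_exposed, "blocked": i < n_blocked}
        for i in range(n)
    ]


def never_work(person):
    # Story A: masks never work. Infection follows the hidden cause alone.
    return person["exposed"]


def sometimes_work(person):
    # Story B: masks sometimes work. A worn mask stops infection in some people.
    return person["exposed"] and not (person["mask"] and person["blocked"])


def infected_count(people, story):
    return sum(1 for p in people if story(p))


# Story A: the two groups happen to differ in the hidden cause.
a_masks = infected_count(make_group(100, True, 70), never_work)
a_no_masks = infected_count(make_group(100, False, 75), never_work)

# Story B: same hidden cause in both groups, masks block 5 infections.
b_masks = infected_count(make_group(100, True, 75, n_blocked=5), sometimes_work)
b_no_masks = infected_count(make_group(100, False, 75), sometimes_work)

print(a_masks, a_no_masks)  # 70 75
print(b_masks, b_no_masks)  # 70 75
```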

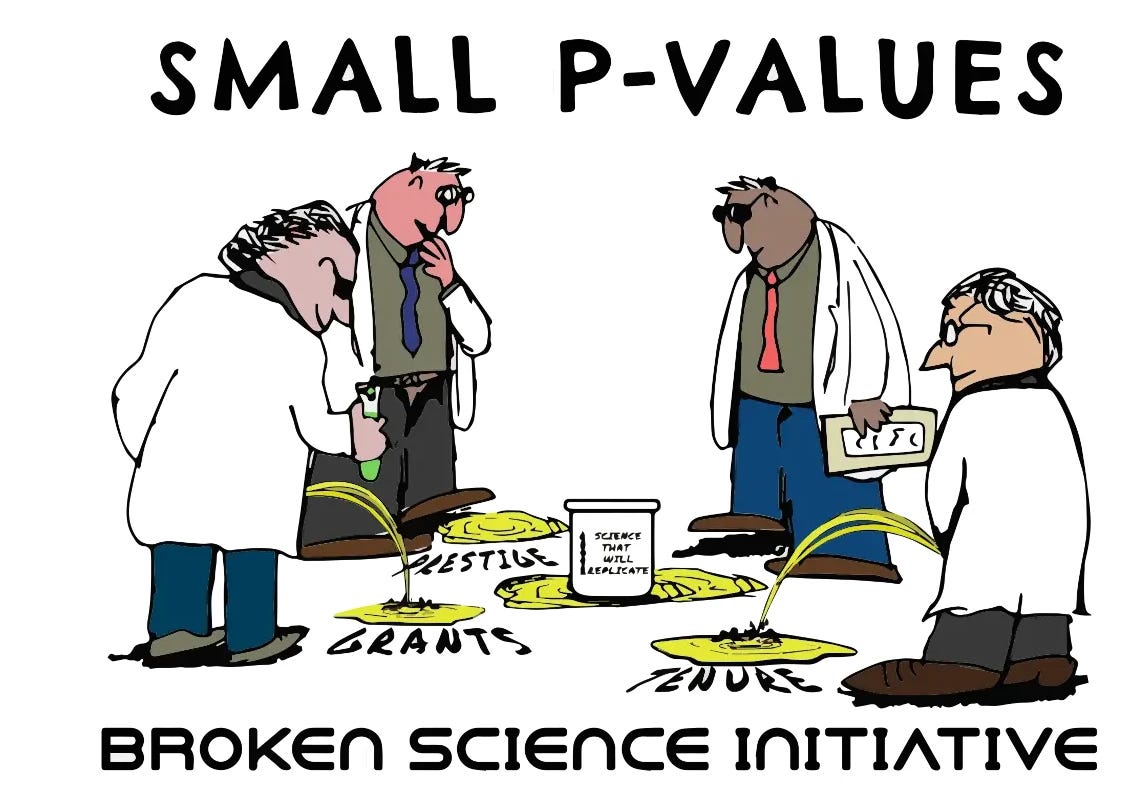

If you can see this, you see far. Incidentally, this is yet another proof for the silliness of hypothesis testing and p-values.

Now let’s see why despair is not warranted with your third question.

[Q3] We answer this the same as your second question. Your bakery model is of some form, and uses weather input and so on, and predicts revenue. When the inputs are all some identical value (each), the model will spit out the same prediction for revenue. Because that is what you told it to do. Simple as that.
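A sketch of what I mean, with a made-up linear form. The function name, the three inputs, and every coefficient are hypothetical, chosen only so the arithmetic is easy to follow; your real bakery model will look different. Whatever form it takes, identical inputs give the identical prediction.

```python
def predict_revenue(temperature_c, is_weekend, last_week_sales):
    """Toy revenue model. All coefficients are invented for illustration."""
    return (
        200.0
        + 5.0 * temperature_c
        + 150.0 * (1 if is_weekend else 0)
        + 0.5 * last_week_sales
    )


first = predict_revenue(20, True, 1000)
second = predict_revenue(20, True, 1000)
print(first, second)  # 950.0 950.0
print(first == second)  # True
```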

For masks, we can’t be sure about cause, but we can build a model based on what we saw and predict infections based on masks and no masks. It’s purely a correlation model, like your bakery model. But that doesn’t mean it can’t be useful.

We might look outside the model and investigate individual cases: what caused the revenue on this day, or what caused infection in this person. Huge problem! Not impossible, just very difficult.

Most scientists want to bypass that difficulty, and desire to conclude their correlational models proved cause. This is why they are always “rejecting nulls” and the like.

But it’s wrong.

Buy my new book and learn to argue against the regime: Everything You Believe Is Wrong.