Jaynes’s book (first part): https://bayes.wustl.edu/etj/prob/book.pdf

Permanent class page: https://www.wmbriggs.com/class/

Uncertainty & Probability Theory: The Logic of Science


Video

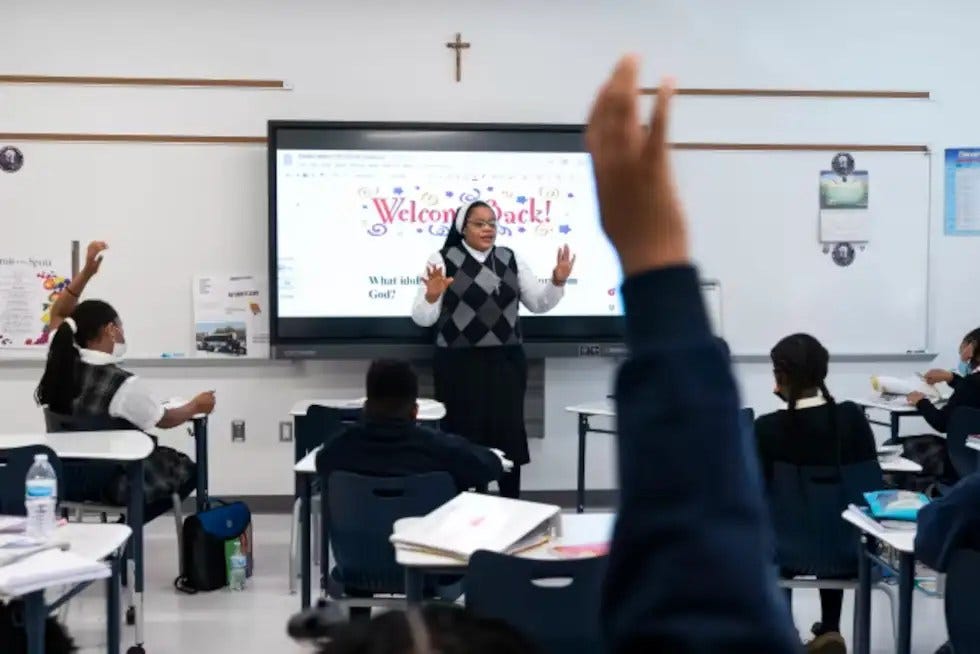

boy do i look mean

Links:

Bitchute (often a day or so behind, for whatever reason)

HOMEWORK: Read!

Lecture

This is an excerpt from Chapter 6 of Uncertainty. For readability, the citations have been removed.

Not a Cause

Randomness is not a cause. Neither is chance. It is always a mistake to say things like “explainable by chance”, “random change”, “the differences are random”, “unlikely to be due to chance”, “due to chance”, “sampling error”, and so forth. Mutations in biology are said to be “random”; quantum events are called “random”; variables are “random”, and all of these things are said to take their values because of chance. An entire theory in statistics is built around the erroneous idea that chance is a cause. This theory has resulted in much heartbreak, as we shall see.

Flip a coin. Many things caused that coin to come up heads or tails: the initial impetus, the strength of the gravitational field, the amount of spin, and so on, as we have discussed previously. If we knew these causes in advance, we could deduce—predict with certainty—the outcome. This isn’t in the least controversial. We know these causes exist; yet the fact that we might not know them for this flip does not imbue the coin with any magical properties. The state of our mind does not affect the coin in any, say, psycho-kinetic sense.
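The claim that known causes yield a deduced outcome can be made concrete with a deliberately idealized coin. This is a sketch for illustration only, not a model taken from the text: it assumes no air resistance, a coin spinning about a horizontal axis at a constant rate, and a catch at launch height with no bounce. The function name `flip`, the constant `G`, and the input values are hypothetical. Given the launch speed and spin rate, the face that ends up showing is computed, not drawn from anywhere.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed constant)

def flip(launch_speed: float, spin_rate: float, start: str = "heads") -> str:
    """Deduce which face shows when an idealized coin is caught.

    Hypothetical assumptions: no air resistance, constant spin (rad/s) about a
    horizontal axis, caught at the launch height with no bounce.
    """
    flight_time = 2.0 * launch_speed / G   # time to rise and fall back
    angle = spin_rate * flight_time         # total rotation in radians
    # The starting face is still up if the coin's normal points upward.
    same_face_up = math.cos(angle) > 0
    other = "tails" if start == "heads" else "heads"
    return start if same_face_up else other

# Same causes, same outcome, every time: nothing is left over for "chance".
for speed, spin in [(2.0, 32.0), (2.0, 40.0)]:
    print(speed, spin, flip(speed, spin))
```

Running it again with the same inputs gives the same faces. Only ignorance of `launch_speed` and `spin_rate` would tempt us to call the result “random”.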

Pick up a pencil and let it go in mid-air. What happened? It fell. Why? Because of gravity, we say, a cause with which we are all familiar. But the earth’s gravity isn’t the only force operating on the pencil; it is only the predominant one. We don’t consider the pencil’s fall “random” because we know the nature or essence of the cause and deduce the consequences. We need to say more about what makes a model causal rather than probabilistic, but a man standing in the middle of a field flipping a coin is thinking more probabilistically than the man dropping a pencil. Probabilities become substitutes for knowledge of causes; they do not become causes themselves.

The language of statistical “hypothesis testing” (in either its frequentist or its Bayesian flavor, with posteriors or Bayes factors for the latter) is very often used in a causal sense, even though this is not the intent of those theories. We must acknowledge that the vast majority of users of models of uncertainty think of them in causal terms, mistakenly attributing causes to various ad hoc hypotheses or to “chance.” Attributing anything to “chance” is to attribute it to a chimera, a ghost. This kind of attribution language, which is nearly universal, also implies that the parts not attributable to “chance” are attributable, in a causal sense, to the other “variables” in the model, which is an unjustifiable stance, as we’ll see in the next Chapter.

Specific examples will be offered later, but for now suppose the user of a model of income has input race into that model, which occurs in two flavors, J and K (these letters are next to each other on my keyboard). The “null” hypothesis will be incorrectly stated as “there is no difference” between the races. We know this is false because if there were no difference between the races, we would not be able to discern the race of any individual. But maybe the user means “no difference in income” between the races. This is also likely false, because any measurement will almost surely show differences: the measured incomes of those of race J will not identically match the measured incomes of those of race K. Likewise, non-trivial functions of the income, like the mean or median, will also differ between the races.

If the observed differences are small, in a sense to be explained in a moment, we “fail to reject” the “null”; it is never accepted. Why this curious and baffling language is used is explained in the Causality Chapter when we discuss falsifiability. For now, all we need know is that small (but actual) differences in income will cause the “null” to be accepted. (Nobody really thinks in terms of failing to reject, despite what the theory says.) When the “null” is accepted, it is declared that there is “no” difference between the races, or that any differences we do see are “due to”, i.e. caused by, chance.

But chance isn’t a cause. Chance isn’t a thing. There is no chance present in physical objects: it can be neither extracted nor measured. It cannot be created; it cannot be destroyed. It isn’t an entity. The only possible meaning “due to chance” or “caused by chance” could have is magical, where the exact definition is allowed to vary from person to person, depending on their fancy.

Some thing or things caused each person measured to have the income he did. Race could have been one of these causes. An employer might have looked at an employee and said to himself, “This employee is of race K; therefore I shall increase his salary 3% over the salary I would have offered a member of race J.” Or he might not have said it, but did it anyway, unthinkingly. Race here is a partial cause. The man did not receive his entire salary (I suppose) because he was of race K. This kind of partial cause might have happened to some, none, or all of the people measured. If the researcher is truly interested in this partial cause, he would be better served to interview whoever assigns salaries and so discover the causes of salary in each case. Assuming nobody lies or misremembers, and that everyone can bring themselves to proper introspection (an assumption of enormous heftiness), this is the only way to assign causes. But such measurements are time-consuming and expensive and, if employed universally, would slow research to a crawl. Results must needs be had! The advantage of this more measured pace would be that far fewer results would be absurd, as the results of many studies conducted with ordinary statistics surely are. Why are they absurd? Researchers have been falsely taught that if certain statistical thresholds are crossed, causality is present. This fallacy is the cause of the harm spoken of above.

Even if the null is not rejected, it is still possible that some or even all of the people measured had salaries in part assigned because of their race. There isn’t any way to tell by looking only at the measured incomes and races. If the null is accepted, no person, it is believed, could have had their income caused partially by their race. Again, there isn’t any way to tell by looking only at the data. But when the null is accepted, almost all researchers will say that causality due to race is absent, replaced, impossibly, by chance; or the researcher bent on proving differences in cause will say, if the null is accepted, that the differences are still there, he just can’t now prove it. The truth is that, looking just at measured race and income and at nothing else, we have no idea and can have no idea why anybody got the salaries they did. To say we can is wild invention, replacing reality with wish.
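A small worked example may help fix the point. The numbers are invented for illustration and are not data from any study: eight hypothetical base salaries (in thousands), with every race-K salary raised 3% because of race, as in the employer example. Race is therefore, by construction, a partial cause of every K income. Welch’s two-sample t statistic is written out by hand (the helper name `welch_t` is hypothetical) so only the standard library is needed, and |t| > 2 is used as a rough rule-of-thumb cutoff; the exact critical value depends on the degrees of freedom.

```python
import math
import statistics

# Hypothetical base salaries (thousands) an employer would offer before race enters.
# Both groups get the same base list to keep the arithmetic transparent.
base = [30, 45, 52, 61, 38, 75, 49, 58]

income_j = list(base)
# By construction, race is a partial cause of EVERY race-K salary: a 3% raise.
income_k = [round(1.03 * s, 2) for s in base]

def welch_t(a, b):
    """Welch's two-sample t statistic for the difference in means (b minus a)."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(b) - statistics.mean(a)) / se

t = welch_t(income_j, income_k)
print(f"mean J = {statistics.mean(income_j):.2f}, mean K = {statistics.mean(income_k):.2f}")
# |t| > 2 is only a rough rule of thumb; the exact cutoff depends on degrees of freedom.
print(f"t = {t:.3f}; 'null' rejected? {abs(t) > 2}")
```

The means differ, as measured incomes almost surely will, yet the statistic falls far short of the cutoff, so the “null” would be “accepted” even though race partly caused every K salary. Nothing in the two columns of numbers reveals that cause; we know it only because we built it in.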

On that latter point: when the null is accepted but the researcher would rather not accept it, perhaps because his hypothesis was consonant with his well-being or friendly to some preconception, he immediately reaches for factors outside the measured data. “Well, I accepted the null, but you have to consider this was a population of new hires.” That may be the case, but since that evidence did not form part of the premises of the model, it is irrelevant if we want to judge the situation based on the output of the model. I have much more to say on this when discussing models. It is obvious anyway that, to his credit, the researcher is looking for causes. Even if he gets them wrong, that is always the goal, or should be.

Another popular fallacy, when “nulls” are rejected, is the I-can’t-think-of-another-reason-so-my-explanation-is-correct fallacy. If the classical (or any) procedure says there are differences (which we could have known just by looking), then the researcher will say those differences are caused by the differences in race. He will assume his cause always applies, or at least that it mostly or usually applies. Yet he will never have measured any cause, so he is being boastful, especially considering how easy it is to reject “nulls”. Later, when discussing hypothesis tests, I cover this false dichotomy in more detail.

Aristotle (2 Physics v) gives this example of what people mean when they say “caused by chance”:

Some people even question whether [chance and spontaneity] are real or not. They say that nothing happens by chance, but that everything which we ascribe to chance or spontaneity has some definite cause, e.g. coming “by chance” into the market and finding there a man whom one wanted but did not expect to meet is due to one’s wish to go and buy in the market…

A man is engaged in collecting subscriptions for a feast. He would have gone to such and such a place for the purpose of getting the money, if he had known. [But he] actually went there for another purpose and it was only incidentally that he got his money by going there; and this was not due to the fact that he went there as a rule or necessarily, nor is the end effected (getting the money) a cause present in himself — it belongs to the class of things that are intentional and the result of intelligent deliberation. It is when these conditions are satisfied that the man is said to have gone “by chance”. If he had gone of deliberate purpose and for the sake of this—if he always or normally went there when he was collecting payments—he would not be said to have gone ‘by chance’.

Notice that chance here is not an ontological (material) thing or force, but a description or a statement of our understanding (of a cause). Aristotle concludes, “It is clear then that chance is an incidental cause in the sphere of those actions for the sake of something which involve purpose. Intelligent reflection, then, and chance are in the same sphere, for purpose implies intelligent reflection.” And “Things do, in a way, occur by chance, for they occur incidentally and chance is an incidental cause. But strictly it is not the cause—without qualification—of anything; for instance, a house-builder is the cause of a house; incidentally, a flute player may be so”. Chance used this way is like the way we use coincidence.

There is also spontaneity, which is similar: “The stone that struck the man did not fall for the purpose of striking him; therefore it fell spontaneously, because it might have fallen by the action of an agent and for the purpose of striking.” But this does not mean that nothing caused the stone to fall. It could very well be that the stone was made to fall by some wilful agency, as many might imagine, but because we have no evidence of this, save our suspicions, we can’t be sure. We can have faith that the stone was sent by God for some purpose, we can have superstition that some evil entity caused the tumble, we can believe it was just “one of those things”. Which is the right attitude: faith, superstition, or disbelief? It can’t be known from the concurrence alone. Just as in the race-income example, we have to look outside the “data”. This necessity is ever present. Data alone are meaningless.

Persi Diaconis and Fred Mosteller provide a well-known definition of coincidence: “A coincidence is a surprising concurrence of events, perceived as meaningfully related, with no apparent causal connection”. The phrase “no apparent causal connection” is apt but incomplete, as we have just seen. Rather, coincidences are taken to prove causation, by whom or what we might not know but only suspect.

Lastly, again Aristotle, “Now since nothing which is incidental is prior to what is per se, it is clear that no incidental cause can be prior to a cause per se. Spontaneity and chance, therefore, are posterior to intelligence and nature. Hence, however true it may be that the heavens are due to spontaneity, it will still be true that intelligence and nature will be prior causes of this and of many things in it besides.” In other words, “posterior to intelligence and nature” means they come after as explanations and not prior as causes (Bayesians ought to take pleasure in that choice of words). The language of calling chance, randomness, and spontaneity explanations is risky because explanation quickly becomes cause, and, as just said, we can’t know the “higher” cause of any event just by examining the data at hand. It is thus better to avoid the words altogether, especially in science, where the goal is to understand cause, unless one can be exceedingly careful.


"boy do i look mean" It's Monday morning.

Carl Jung thought that synchronistic events are not just random occurrences but rather manifestations of an acausal connecting principle. Coincidences are simply contemporaneous occurrences with no inherent significance, while synchronistic events have a deeper meaning that resonates within the individual's psyche.

Marie Louise von Franz once spoke of a synchronistic event that happened to a client. Keep in mind that this was in the 1950s in Europe. The client had ordered a blue dress from a shop, and the dress arrived on the same day that she received notice of the death of a friend and the subsequent funeral. The shop sent a black dress rather than the blue one that had been ordered. Without the connection to the funeral, this would simply have been a mistake on the part of the shop; however, given the personal connection to the upcoming funeral and the need for a black dress, this becomes more than a coincidence and is, I think, an example of meaningful synchronicity.

When we see strong correlations in large sample sizes, are we obliged to conclude they are causally connected (at least in part if not wholly)?

Or is this tangential to the discussion, since knowing there must be a cause and knowing what the cause is are different topics?