Class 18: Probability Is Not Propensity or Physical

Uncertainty & Probability Theory: The Logic of Science

HOMEWORK: Read the excerpt. I mean it: read it!

Lecture

This is an excerpt from Chapter 5 of Uncertainty. All the references have been removed. Some LaTeX markup remains, because Substack can’t interpret it in-line.

Logic is not an ontological property of things. You cannot, for instance, extract a syllogism from the existence of an object; that syllogism is not somehow buried deep in the folds of the object waiting to be measured by some sophisticated apparatus. Logic is the relation between propositions, and these relations are not physical. A building can be twice as high as another building; the "twice" is the relation, but what exists physically are only the two buildings. Probability is also the relation between sets of propositions, so it too cannot be physical. Once propositions are set, the relation between them is also set and is a deducible consequence, i.e. the relation is not subjective, a matter of opinion. Mathematical equations are lifeless creatures; they do not "come alive" until they are interpreted, so that probability cannot be an equation. It is a matter of our understanding.

So-called subjective probability is therefore a fallacy. The most common interpretation of probability, limiting relative frequency, also confuses ontology with epistemology and therefore gives rise to many fallacies. Some authors are keen on not declaring any single definition of probability, and are willing to say that probability changes on demand, but this argument is, as far as I can tell, aesthetical and not logical. Probability, like truth and (Aristotelian, or "meta") logic, is unchanging regardless of application.

Probability Is Not Physical

Here is perhaps the simplest demonstration that probability is not a physical property. Take the game of craps, played with two six-sided dice. On the come-out, the shooter wins with a 7 or 11. The two-dice total will come to something, possibly this 7 or 11. Is this total caused by "chance" or probability? What we know is that the two-dice total is constrained to be a number between 2 and 12 inclusive. If the only---I mean this word in its most literal sense---information that we have is that X = "There will be a game played which will display a number between 2 and 12 inclusive", then we can quantify our uncertainty in this number, which is that each number has probability 1/11 of showing (there are 11 numbers in 2 to 12, the statistical syllogism provides the rest). If you imagine that this total is made by two dice, then you are using more information than is provided. With only X, which is silent on how the total is produced, silent on dice, and silent on many other things, then the probability is 1/11 for every total.

Craps players have more information than X. They know the total can be from 2 to 12, but they also know the various ways the total can be constructed, e.g. 1 + 1, 1 + 2, 2 + 1, ..., 6 + 6. There are 36 different ways to get a total using this new information, and since some of the totals are identical, the probability is different for different totals. For example, snake eyes, 1 + 1, has 1/36 probability.

It should now be clear that these different probabilities are not a property of the dice (or the dice and table and shooter, etc.). If probability were a physical property, then it must be that the total of 2 has 1/11 physical probability and 1/36 physical probability! How does it choose between them? Quantum mechanical wave collapses? No, the parsimonious solution is that probability is a state of mind; rather, it states our uncertainty given specific information. Change the information, change the probability.
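
The two sets of probabilities can be checked by direct counting. The following is a minimal sketch, not part of the original excerpt; the names `pr_given_x` and `pr_given_dice` are illustrative only. It uses exact fractions so that 1/11 and 1/36 appear as such.

```python
from fractions import Fraction
from itertools import product

totals = range(2, 13)

# Premise X only: "a number between 2 and 12 inclusive will show".
# Nothing is said about dice, so each of the 11 totals gets 1/11.
pr_given_x = {t: Fraction(1, len(totals)) for t in totals}

# Premise X plus the knowledge that the total is the sum of two six-sided dice.
ways = {t: 0 for t in totals}
for a, b in product(range(1, 7), repeat=2):
    ways[a + b] += 1
pr_given_dice = {t: Fraction(n, 36) for t, n in ways.items()}

# Same proposition ("the total is t"), different premises, different probability.
for t in totals:
    print(t, pr_given_x[t], pr_given_dice[t])

# Probability the shooter wins on the come-out (7 or 11) under each premise set.
win_given_x = pr_given_x[7] + pr_given_x[11]
win_given_dice = pr_given_dice[7] + pr_given_dice[11]
print(win_given_x, win_given_dice)
```

Nothing about the dice changes between the two dictionaries; only the premises supplied to the calculation do.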

There have been many attempts to tie probability to physical chance or propensity, including a discussion of both which doesn't quite reach a conclusion. I think all of these fail. Let A be some apparatus or experimental setup; the things and conditions which work towards producing some effect P, which is, as ever, some proposition of interest. For example A could be that milieu in which a coin is flipped, a milieu which includes the person doing the deed, the characteristics of the coin, the physical environment, and so forth. P = "A head shows." A theory of physical chance might offer $\Pr(\mbox{P}| \mbox{A})\equiv p$, which is considered a property of the system (which I mark with the equivalence relation). Few deny that the argumentative or logical probability of P, perhaps also given A, would have the same value, but the argumentative probability is acknowledged properly as epistemological whereas the equivalence is seen as a physical essence in the same way length or mass might be. Indeed, Lewis's so-called Principal Principle (sometimes also called Miller's Principle) states that the logical probability should equal the physical chance (in my notation; see the definition provided by Howson and Urbach):
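
As a hedged sketch only: the principle is usually put as "the logical probability of P, given that the physical chance of P is p, equals p." Rendered in the notation above, one plausible form is the following (the exact arrangement of symbols is an assumption, not a quotation from Howson and Urbach):

$$\Pr\big(\mbox{P} \,|\, \mbox{A},\ \Pr(\mbox{P}| \mbox{A})\equiv p\big) = p$$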

The conditions (premises) A are not seen as causative per se; they only contribute to the efficient cause of P (or not-P). What actually causes P? Well, according to this principle, p: the probability itself. Nobody makes that statement blatantly, but that is what is implied by physical chance. This p does not act alone, it is felt; it is a guiding force, it is a mystical energy. This also is never explicitly stated by its supporters. Physical probability is mysterious, mystical, even. Yet it doesn't operate alone; it requires the catalyst A: A allows p to operate as it will, sometimes this way, sometimes that, or sometimes, as in some quantum theories, an infinite number of ways. Just how p rises from its hidden depths and operates, how it chooses which cause to invoke---which side of P to be on, so to speak---is a mystery, or, as is again sometimes claimed in quantum theories, there is no cause of P and p is its name.

The cause of an event cannot be logic, a claim with which any but strict idealists would agree. And since probability is logic, at least sometimes, as chance theorists admit, probability-as-logic cannot be a cause. Therefore, unless there be no cause of an event, if we are to save, as we must, the principle of causation, in at least some situations chance itself must be the cause, or contribute to the overall cause. Yet saying chance is a cause is saying Fortuna herself still meddles in human affairs!

As already detailed in Chapter 4, if we knew the initial conditions of a coin flip and knew the forces operating on the coin, we could predict with certainty whether (say) P = "Head" is true. This knowledge is not the cause. Neither are the initial conditions the cause. The equations with which we represent the motion of the spinning object are not the cause; these beautiful things are mere representations of our knowledge of part of the cause. No: the coin itself, its handler, and the environment are the material cause; the formal cause is the flip; the physical forces themselves are the efficient cause of the outcome; and the final cause is the object of the flip, the Head itself. (I investigate cause more fully in Chapter 6.)

It is obvious enough that it is a fallacy to say an effect has no cause merely because a man does not know that cause. It follows that it is also a fallacy to say the effect has no cause because two men do not know it, and so on for all men. Ignorance itself cannot be a cause. Ignorance is the absence of knowledge, a nothingness, and nothing cannot be a cause. Nothing is no thing. Nothing has no power whatsoever: it is nothing.

Something caused P to be ontologically true or false; if P were observable, that is, i.e. empirical. Nothing is causing, or can cause, Martians to wear hats. This won't be the last time we observe a decided empirical bias in the treatment of probability. Somehow that bias never was taken up in logic, a subject an enterprising historian can tackle.

Now if A were a complete description of the coin flip then p would be 0 or 1 and no other number. We would know whether P was true or false. In everyday coin flips we don't know what the outcome will be; our premises are limited. But some complete A does exist---it would not be proper to call the premises of A "hidden variables"; instead, they are just unknown premises---because some thing or things cause the outcome. And because this is true, it implies that physical chance is real enough, but it only has extreme probabilities (0 or 1). In everyday coin flips we have, or should have, the idea that the conditions change from flip to flip, and that whatever is causing P or not-P is dependent on these changes. Our knowledge of these changes might be minimal or nonexistent, but that means nothing to whatever causes are operating on the coin.
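
A toy illustration of the difference between complete and limited premises follows. It is a sketch under loud assumptions and is not the treatment from Chapter 4: the coin starts heads-up, is launched straight up at speed `v`, spins at a constant rate `omega`, and is caught at launch height. The function names, the numerical ranges, and the even weighting of the unknown conditions are all hypothetical choices made for the example.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed constant)

def lands_heads(v, omega):
    """Toy coin: heads-up at launch, speed v (m/s) upward, spin omega (rad/s).

    It is caught after t = 2v/g seconds; heads faces up when the total
    rotation angle omega * t has positive cosine.
    """
    t = 2.0 * v / G
    return math.cos(omega * t) > 0

def pr_heads_given_complete_premises(v, omega):
    # With v and omega known exactly, the outcome is deduced: 0 or 1.
    return 1 if lands_heads(v, omega) else 0

def pr_heads_given_ranges(v_lo, v_hi, w_lo, w_hi, n=300):
    """Premises only bound v and omega; weigh an even grid of cases equally."""
    heads = 0
    for i in range(n):
        v = v_lo + (v_hi - v_lo) * (i + 0.5) / n
        for j in range(n):
            w = w_lo + (w_hi - w_lo) * (j + 0.5) / n
            heads += lands_heads(v, w)
    return heads / (n * n)

exact = pr_heads_given_complete_premises(2.5, 200.0)
vague = pr_heads_given_ranges(2.0, 3.0, 150.0, 250.0)
print(exact, vague)
```

In this toy setup the first number is extreme and the second sits near one half, yet the same rule of motion produced every outcome in both calculations. Only the premises differ.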

The study of chaos, which in its simplest form is defined as sensitivity to initial conditions, is instructive. Causality is not eschewed in chaos theory; indeed, it is often known precisely what the causes are. Equations in chaos theory are fully determinative: if the initial conditions and values of all constants are known---as in the logical {\it known} and not some colloquial loose more-or-less sense---then the progress of the function is also known precisely. A chaotic equation is thus just like a non-chaotic one, when it comes to knowing what determines what. We know what determines the value $f_t$ for any $t$ if $f_t= f_{t-1} + 1$ and $f_0 = 1$; that which determines it is $f_t= f_{t-1} + 1$. This determination does not disappear because it is, for example, hidden from view and only (say) a picture of $f_t$ is shown. Again, because some individual does not know the causes of $f_t$, or what determines it, does not imply that the causes or determinations do not exist.
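
Here is a short sketch of the point about determination. The rule $f_t = f_{t-1} + 1$ is the one given above; the logistic map $x_t = 4x_{t-1}(1 - x_{t-1})$ is not from the text and is brought in only as a standard example of a chaotic rule. The helper `iterate` is a hypothetical name.

```python
def iterate(step, x0, n):
    """Apply the rule `step` n times starting from x0; return the whole path."""
    path = [x0]
    for _ in range(n):
        path.append(step(path[-1]))
    return path

def plus_one(f):
    # The non-chaotic rule from the text: f_t = f_{t-1} + 1.
    return f + 1

def logistic(x):
    # A standard chaotic rule (an assumption added for illustration).
    return 4.0 * x * (1.0 - x)

# Non-chaotic: f_0 = 1 fully determines every later value.
linear = iterate(plus_one, 1, 10)

# Chaotic but still fully determined: the same start gives the same path.
a = iterate(logistic, 0.2, 50)
b = iterate(logistic, 0.2, 50)

# Sensitivity: a start known only "more or less" soon gives a different path.
c = iterate(logistic, 0.2 + 1e-10, 50)
largest_gap = max(abs(x - y) for x, y in zip(a, c))

print(linear[-1], a == b, largest_gap)
```

The paths `a` and `b` agree at every step because the rule and the starting value determine them. The path `c` drifts away because its premise is different, not because any cause went missing.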

Another point needs clarification. You will often hear it said of a series of data, usually a time series, that a model which represents it is wrong because the model does not account for "chaos." We'll later discuss models in much more detail, but this view is incorrect. On this view, any model which fails to predict perfectly (that is, which predicts non-extreme probabilities) would be wrong. Like probability, models only encapsulate what we know, not everything that can be known. Models, we will learn, do not have to be causal or determinative to provide accurate (in a sense to be defined) forecasts.

Imperfect models are as good as we can get with quantum systems. The strangeness of quantum mechanics has led to two incorrect beliefs. The first is that since we cannot predict with perfect accuracy, no cause exists. The second is that this non-cause which is a cause after all is probability. Here is Stanley Jaki quoting Turner: "Every argument that, since change cannot be 'determined' in the sense of 'ascertained' it is therefore not 'determined' in the absolutely different sense of 'caused', is a fallacy of equivocation." Jaki says that this fallacy "has become the very dubious backbone of all claims that epistemology is to be drastically reformulated in terms of quantum mechanics, including its latest refinements of Bell's theorem."

Contrary to what is sometimes read, Bell did not prove causes of QM events do not exist. He only showed that, in certain arrangements, locality must be false. Local efficient causes therefore do not always exist (this assumes we accept all the other standard QM premises). But, for example in a crude summary of a typical QM EPR experiment, if with an entangled pair of particles one is measured to be "spin up" along its "x-axis" while the other instantaneously, and at a great distance away, becomes "spin down", some thing or things still caused these measurements. Locality is violated. What "paradoxes" like this show is that the cause cannot be localized to the places where the measurements are taking place. They do not show, and cannot show, that nothing caused the measurements. Nothing cannot be a cause. Nothing is the absence of causes. Some physicists, however, are determined to believe causes aren't real. I'll discuss this view more later.

Bell did not even show that we can never know a QM event's causes. Since this isn't a book on quantum physics, I leave aside the question whether such a proof exists. I tend to think it might, even though the mind of the First Cause is almost surely closed off to us. Meanwhile, QM theory allows us to make good predictions, i.e. specify the probabilities of events. We can say these probabilities "determine" the events in the sense of "ascertain", but we cannot say the probabilities "determine" the outcomes in the sense of "cause." If, after working through the math and fixing the values of some constants, like Planck's---these are our premises---we predict (say) there is an 82% chance a particle will be in a certain region of space when measured, and we subsequently measure it there, that 82% itself did not cause the value of the measurement. That 82% did not cause the probability to "collapse". How could a probability make itself "collapse"?

All we know with QM is that in experiments where we know as many premises as it is possible to know (we think), we can make excellent predictions. But we can do the same in coin flips or dice throws; indeed, casinos make a living doing just that. That systems exhibit stability does not mean that probabilities are causes. Here's another proof. Repeatedly let go of apples from the top of a tall building. We can work out the theory that says the apple will fall with a 100% chance. It wasn't the 100% chance that caused the apples to fall, it was gravity.

One of the latest attempts to avoid admitting defeat about knowledge of QM causes is Everett's Many Worlds (there are other similar attempts). This can be paraphrased as saying that when the wave-function of each and every object "collapses", it does so across "many worlds", such that each possible value of "collapse" is realized in one (or every one) of these worlds. The number of "worlds" thus required for this theory since the beginning of the universe is a number so large that it rivals infinity, especially considering that wave-function equations are typically computed on a continuum. Even if this theory were true, and I frankly think it is not, it doesn't change a thing. Many Worlds does not say, and cannot say, why this wave-function "collapsed" to this value in this world. Some cause still must have made it happen here-and-now. Many Worlds is an ontological theory, anyway. Murray Gell-Mann, for instance, offers a purely epistemological view of that theory which better accords with my view. This section also does not imply the wave-function does not exist, in some sense, as argued in (RinDuf2015); anyway, the probabilities derived from wave-functions are not the wave-functions themselves, but the probabilities are conditioned on the wave-functions, just as all probabilities are conditioned on something. Probabilities derived from wave-functions are just that: derivations, and are not the wave-functions themselves, though some (if I understand them correctly) do make this claim.


Probability is a measure of ignorance of initial states?

>>God cannot be surprised (He even gives us a clue: "I know the end FROM the beginning").

In the legitimate courts of law, the process is designed to avoid error, not to ascertain truth. A not guilty verdict is a statement of our ignorance of events, not of our knowledge of innocence.

In science, repeated identical results are not proofs of eternal verity ("the sun also rises") but of insufficient sample size, i.e. ignorance.

In all cases, being finite in knowledge, wisdom and longevity, a degree of humility before that which is greater than we, is indicated.

Hence the greatest of scientists have historically been Christians, people already inclined to such humility ("thinking God's thoughts after Him").

The worst scientific (and thus, statistical) blunders have been made by those possessing unwarranted certainty in their own knowledge.

For example, even assuming that the Hubble Constant measures something akin to what Hubble supposed, it is somewhat dubious to extrapolate from a sample of several decades to 14.6 billion years. Would any of you believe I could measure the opinions of the entirety of our planet by surveying 100 people?

What would I be measuring? Would the error bars ever possibly be appreciably smaller than the whole? Whatever the result, of what practical or theoretical use would it be, except as an example of boundless hubris?

Sadly I sit out the classes.

Because try as I might, I cannot make heads nor tails of the stuff, due to a certain flavor of mental retardation that surfaces whenever I look at such content or any page containing more than 5 numerals, never mind equations, which trigger a grand mal seizure.

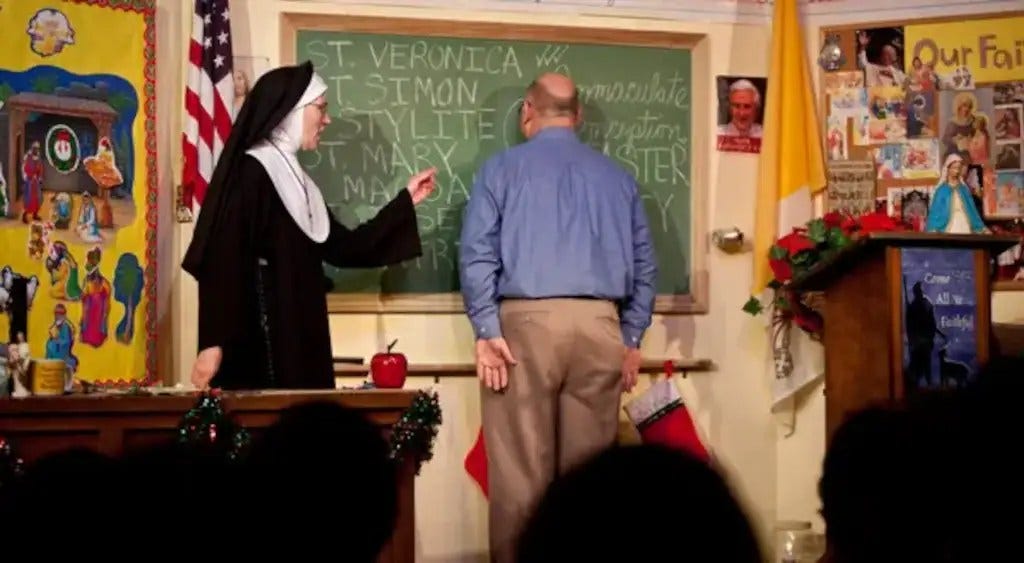

However, before the photo of the Catholic nun at the blackboard passes into history I wanted to say how much it tickles me every single time I see it. Nobody who did not go to Catholic school in olden times can fully appreciate that photograph. Thanks for using it!