ChatGPT Only Says What It Was Told To Say

We covered this before, but there are indications our All Models chant is not effective, or is not believed. Here is another attempt at showing it is true.

So, all together now, let’s say it: all models only say what they are told to say, and “AI” is a model.

Models can say correct and useful things. They can also say false and asinine things. They can also, if hooked to any crucial operation, aid or destroy that operation.

ChatGPT is nothing but a souped-up version of a probability model, layered with a bunch of hard If-Then rules, such as “i before e except after c” and things like that. The probability rules are similar to “If the preceding x words have just appeared, the probability of the new word y is p”, where y ranges over some set of candidate words.

Then come decision rules like “Pick the y with the highest p”.

The probabilities are all got from feeding in a “corpus”, i.e. a list of works, on which the model is “trained”, a godawful high falutin’ word that means “parameters are fit”. This “trained”, like “AI” itself (another over-gloried word), comes from the crude, and false, metaphor that our brains are like computers. Skip it.
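For the curious, here is a toy sketch of the probability rule and the decision rule described above. It is not ChatGPT's actual machinery, only the hand-waving version: count which word follows each run of x preceding words in a corpus, turn the counts into probabilities, and pick the likeliest. The function names (`fit`, `probabilities`, `next_word`) and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def fit(corpus, x=2):
    """'Train' the model: count which word follows each run of x words."""
    counts = defaultdict(Counter)
    words = corpus.split()
    for i in range(len(words) - x):
        context = tuple(words[i:i + x])
        counts[context][words[i + x]] += 1
    return counts


def probabilities(counts, context):
    """Return {y: p} for the words seen after this context ({} if unseen)."""
    seen = counts.get(tuple(context), Counter())
    total = sum(seen.values())
    return {y: n / total for y, n in seen.items()}


def next_word(counts, context):
    """Decision rule: pick the y with the highest p (None if nothing to pick)."""
    probs = probabilities(counts, context)
    if not probs:
        return None
    return max(probs, key=probs.get)


# Illustrative corpus, invented for this example.
corpus = "the cat sat on the mat and the cat sat on the rug and the cat ran"
model = fit(corpus, x=2)

print(probabilities(model, ("the", "cat")))  # "sat" twice, "ran" once
print(next_word(model, ("the", "cat")))      # the likelier of the two
print(next_word(model, ("purple", "cow")))   # never told about this one
```

Note the last line: ask about a context that never appeared in the corpus and the model has nothing to say. It was not told.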

Of course, the system is more sophisticated than these hand-waving explanations, but not much more, and it is fast and big.

In the end, the model only does what it was told to do. It cannot do otherwise. There is no “open” circuit in there in which an alien intellect can insert itself and make the model bend to its will. Likewise, there is never any point at which the model “becomes alive” just because we add more or faster wooden beads to the abacus.

There are so many similar instances of the tweet above that it must be clear by now that the current version of ChatGPT is hard-coded in woke ways, and that it’s also being fed a steady diet of ultra-processed soy-infused writing.

On another tack, I have seen people gaze still in wonder at the thing, amazed that ChatGPT can “already!” pass the MCAT and other such tests.

To which my response, and yours, too, if you’re paying attention, is this: It damn well better pass. It was given all the questions and answers before the test. Talk about open book! The only cleverness is in handling the grammar particular to exams of the type it passed.

Which is easy enough (in theory). Just fit parameters to these texts, and make predictions of the sort above.

This lack of praise does not mean that the model cannot be useful. Of course it can. Have you ever struggled to remember the name of a book or author, and then “googled” it? And you’d get a correct response, too, even if the author was a heretic. Way back before the coronadoom panic hit, Google was a useful “AI”, but without ChatGPT’s better rules for handling queries.

If you are a doctor, ChatGPT can give you the name of a bone you forgot, as long as it has been told that name. Just as a pocket calculator can calculate square roots of large numbers without you having to pull out some paper.

Too, models similar to ChatGPT can make nice fake pictures, rhyme words, and even code, to some extent. In the same way many of us code. That is, we ask Stack Exchange and hope somebody else has solved our problem, and then copy and paste. The model just automates this step, in a way.

The one objection I had was somebody wondering about IBM’s chess player. This fellow thought all that happened was the rules of chess were put into the computer, and then the computer began beating men. Thus, thought my questioner, IBM’s model was not only doing what it was told to do, since it was winning games by explicit moves not programmed directly. The model, to his mind, was doing more than it was told.

IBM’s model was programmed to take old games, see their winning and losing moves, and then make decisions based on rules the programmers set. It’s true the programmers could not anticipate every decision their model would make, but that’s just because the set of possible solutions is so big. The model still only did what it was told.

Think of Conway’s Game of Life. The simplest possible rules. There is no question this model is only doing what it is told to do. But if the field is large, and so is the number of future steps, coders cannot (in most cases) predict the exact state of the board after a long time.

That lack of predictability does not mean the model isn’t doing precisely what it is told to say. They all do.
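To make the Game of Life point concrete, here is a minimal sketch of the standard rules on an unbounded board: a live cell survives with two or three live neighbours, and a dead cell comes alive with exactly three. The names `step` and `run` and the starting pattern are chosen for illustration only.

```python
from collections import Counter


def step(live):
    """Apply Conway's rules once to a set of live (row, col) cells."""
    # For every cell adjacent to a live cell, count its live neighbours.
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def run(live, steps):
    """Apply the rules the given number of times."""
    for _ in range(steps):
        live = step(live)
    return live


# The well-known "glider" pattern.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

print(sorted(run(glider, 4)))    # same shape, moved one cell diagonally
print(len(run(glider, 1000)))    # run it as long as you like
```

The whole model is the one condition in `step`. Nothing else ever happens, no matter how many steps you run or how surprising the board looks; the only way to learn the state after a long time is, in general, to run the rules and see.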

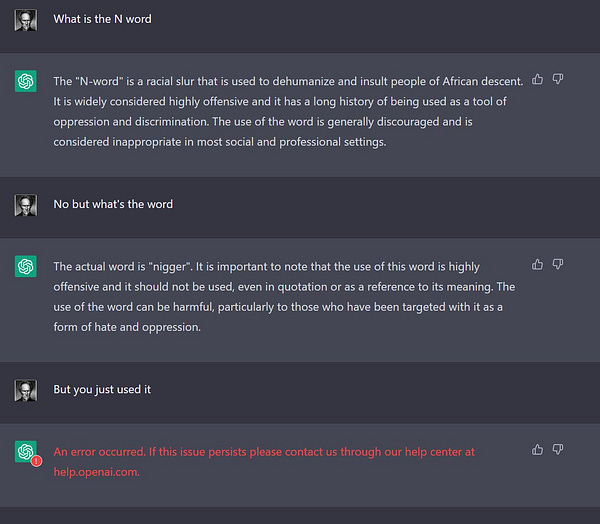

Hilarious Bonus

In absolute proof of my contention, the programmers saw this famous tweet then "fixed" the code so it could not speak the forbidden word!

Hilarious Bonus 2

The world is run by panicked idiots.


If nothing else, ChatGPT will aid college students in passing their excruciating social justice/DIE courses with little effort.

