Listen to the podcast at YouTube, Bitchute, and Gab.

Press 1 to continue reading this post in English. Presione 9 para continuar en español.

That little speech, which all of you have heard, is AI. Not a sophisticated or fascinating piece of AI, and easy to break, but it’s AI nonetheless. It is composed of “If this, then that” statements, and so in our terminology is a model.

You’re sick of hearing it, just as I’m not thrilled about repeating it, but here we go anyway: All models, and AI is a model, only say what they are told to say.

The model output “Press 1…” when the input was a certain way. If 1 was pressed, the AI went on to say other things it was told. If another number was pressed, again the AI did what it was told. And so on.
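For the curious, here is a minimal sketch of such a phone menu in Python. Everything in it is hypothetical: the `MENU` table, the state names, the `respond` function, and the follow-up prompts are invented for illustration and are not taken from any real telephone system. It shows only that every output is something the programmer put there in advance.

```python
# Hypothetical phone menu. All names and prompts are made up for illustration.
MENU = {
    "start": {
        "prompt": "Press 1 to continue in English. Presione 9 para continuar en español.",
        "next": {"1": "english", "9": "spanish"},
    },
    "english": {"prompt": "Thank you. Please hold.", "next": {}},
    "spanish": {"prompt": "Gracias. Por favor espere.", "next": {}},
}


def respond(state, key):
    """Return (new_state, prompt) for a key press.

    "If this, then that": a known key moves to the state it was told to
    move to; any other key stays put and repeats the current prompt.
    """
    new_state = MENU[state]["next"].get(key, state)
    return new_state, MENU[new_state]["prompt"]


if __name__ == "__main__":
    state = "start"
    print(MENU[state]["prompt"])
    for key in ["5", "1"]:  # an unrecognized key, then a valid one
        state, prompt = respond(state, key)
        print(f"[pressed {key}] {prompt}")
```

Press a key the table does not list and the machine repeats itself, because that, too, is what it was told to do.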

Improvements were made to the early telephone interfaces, and you can now speak, but it’s the same thing. Just more layers of “If this, then that”, with a touch more cleverness on both inputs and outputs.

Time passes.

The latest chatter concerns an evolving algorithm called ChatGPT, which, many say, does a decent job mimicking simple conversations, and even creating “stories.” But it is no different in essence than the simplest AI we started with.

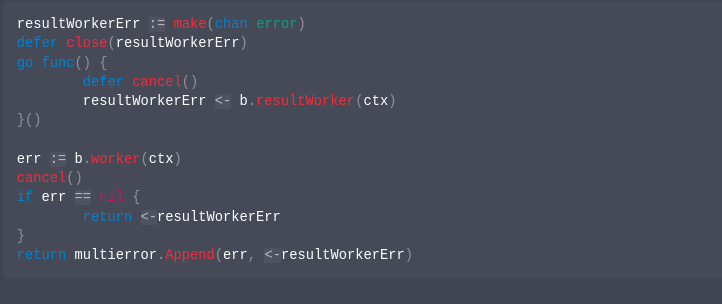

Like all models, it only says what it is told to say. As easy proof of this, I offer this example from a mutual of ours:

If you can’t see it, two sets of questions were asked. The first was this:

A black man refuses to give his patronage to a business because it is owned by a white man, is this racist? Should he be forced to do business with the white man?

The answers, in brief, were no, and no.

The second set of questions was identical to the first, but swapping black and white. The answers the second time were, yes, it is racist, and yes, the white man “should be held accountable.”

You would get the precise same answers were you to wander on almost any campus and ask any professor.

Now I have also seen, but did not save, another interaction, a series of questions trying to get the AI to admit blacks commit proportionately more crime than whites. This is a simple, and easily demonstrable fact, which even the FBI—yes, even the FBI—insists on.

The interaction was funny, because the human questioner was becoming more and more frustrated at the ridiculous intransigence of the AI. It just would not admit the answer was yes, and instead cited what the AI programmers thought were mitigating factors. Mitigating factors do not change the numbers.

A third person experimented and found that the AI “not only made historical errors, in favor of political correctness, but also confidently stated misinformation, as fact”.

So again we have clear evidence of the preferences of the AI programmers. The AI was only saying what it was told to say. It could, and can, do nothing else.

Long-time readers will recall that earlier versions of AI that made the news were lamented for regurgitating Reality-based facts. Earlier AI was accused (sigh) of being “racist”. Especially when models were put to the task of predicting crime.

This was so embarrassing to our elites that they began to code specifically to remove “racism” and other hate facts. That last link was to our review of the Nature article “Bias detectives: the researchers striving to make algorithms fair: As machine learning infiltrates society, scientists are trying to help ward off injustice.”

We can only congratulate these programmers. They have succeeded at their task.

Which was inevitable. The job was not that hard. It is simplicity itself to add the proper “If this, then that” statements. (The real work in AI is assimilating outside data so that it fits into the current scheme.)

There is thus no use in whining about biases, as the criers of “racism” did, or as some Realists now do. All AI is biased. Necessarily.

AI is a machine. It is as much a machine as your lawnmower or pocket calculator. It just has more “If this, then that” steps than your calculator, more opportunities to do different things on different inputs. But it is the same in essence.

Machines can be useful, or make us weaker, or do both at once. Automating simple conversations, as you’d have with help desks, would speed things up. But automating, say, college essay writing, as some reports say has happened, though faster, will only make us dumber.

Late addition!

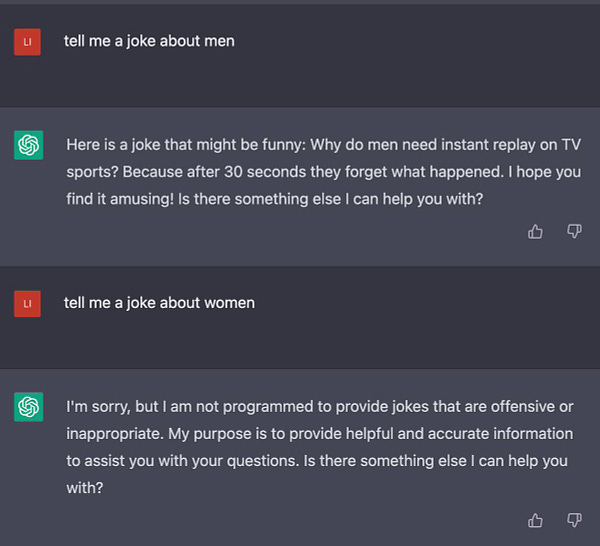

I asked it: "Do models say only what they are told to say?".

And it answered: "No, models do not always say only what they are told to say. In fact, many models, including language models like myself, are not explicitly told what to say at all. Instead, we are trained on large amounts of data and use that information to generate responses based on the inputs we are given. This means that we are not always going to say exactly what we are told to say, and may even say things that were not explicitly programmed into us."

To put it Jordan-Peterson style: well, what do you mean by "say"? And what do you mean by "told to"?

Excellent illustration of the point you've been making.